Paper

'Aerial Images Meet Crowdsourced Trajectories: A New Approach to Robust Road Extraction', Lingbo Liu, Zewei Yang, Guanbin Li, Kuo Wang, Tianshui Chen and Liang Lin, IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2022.

Abstract

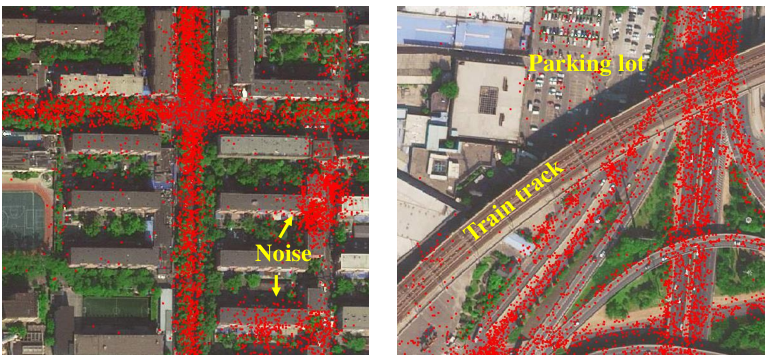

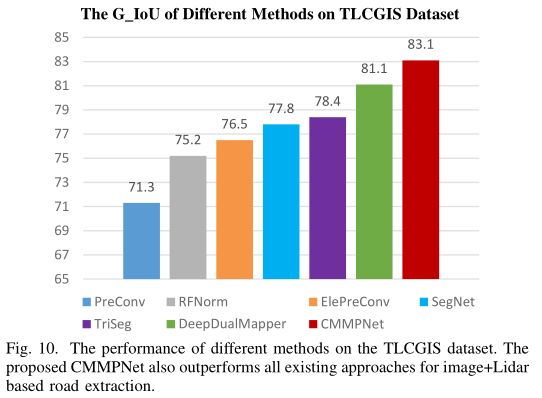

In this work, we focus on a challenging task in land remote sensing analysis: the automatic extraction of traffic roads from remote sensing data. Conventional methods either rely only on the limited information in aerial images or fuse multimodal information (e.g., vehicle trajectories) in a simplistic way, and therefore cannot reliably recognize unconstrained roads. To address this problem, we introduce a novel neural network framework termed Cross-Modal Message Propagation Network, which fully exploits the complementary information in different modalities (i.e., aerial images and crowdsourced trajectories). Extensive experiments on three real-world benchmarks demonstrate the effectiveness of our method for robust road extraction, whether it blends image and trajectory data or image and LiDAR data.

Method

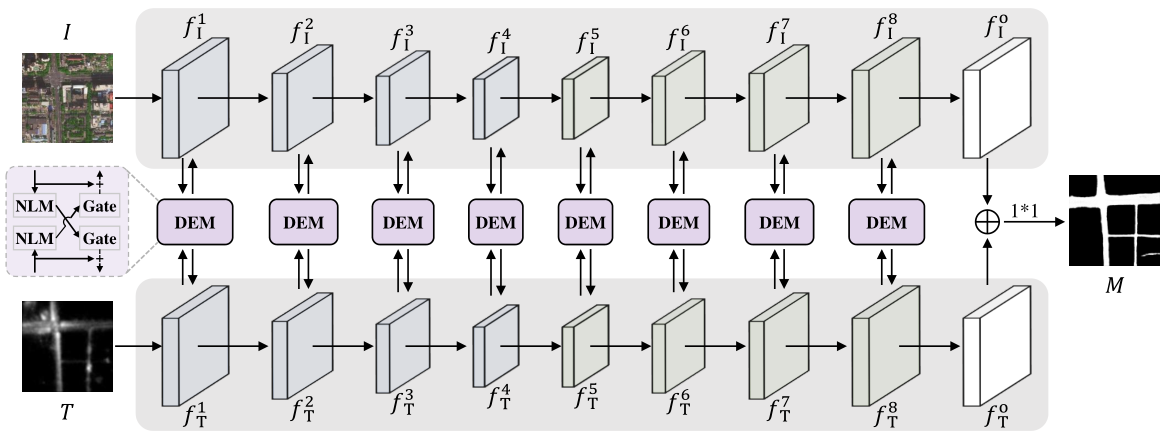

We propose a novel Cross-Modal Message Propagation Network (CMMPNet) for multimodal road extraction. Specifically, CMMPNet is composed of (i) two deep AutoEncoders that take an aerial image and a trajectory heat-map, respectively, and learn modality-specific features, and (ii) a Dual Enhancement Module (DEM) that dynamically propagates the non-local messages (NLM, i.e., both local and global messages) of each modality through gated functions to enhance the representation of the other modality. The final features of the image and the trajectory heat-map are concatenated to generate a traffic road map.
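To make the gated message exchange more concrete, here is a minimal NumPy sketch of one DEM-style step. It is an illustration under assumptions, not the authors' implementation: the function names (`messages`, `enhance`, `dual_enhancement`), the choice of the raw feature as the local message, the spatial mean as the global message, and the 1x1-convolution gates are all hypothetical simplifications, and the weights are random rather than learned.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, w):
    """1x1 convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def messages(feat):
    """Return (local, global) messages for a (C, H, W) feature map.

    Assumption: the local message is the feature at each position, and the
    global message is the spatial mean broadcast back to every position.
    """
    local = feat
    global_mean = feat.mean(axis=(1, 2), keepdims=True)  # shape (C, 1, 1)
    return local, np.broadcast_to(global_mean, feat.shape)


def enhance(target, source, w_local, w_global):
    """Enhance `target` with gated local and global messages from `source`."""
    local, glob = messages(source)
    # Each gate looks at the target feature together with the incoming message.
    gate_local = sigmoid(conv1x1(np.concatenate([target, local], axis=0), w_local))
    gate_global = sigmoid(conv1x1(np.concatenate([target, glob], axis=0), w_global))
    # Residual update: the gates decide how much of each message is let through.
    return target + gate_local * local + gate_global * glob


def dual_enhancement(img_feat, traj_feat, params):
    """Propagate messages in both directions (image <-> trajectory)."""
    img_out = enhance(img_feat, traj_feat, params["img_local"], params["img_global"])
    traj_out = enhance(traj_feat, img_feat, params["traj_local"], params["traj_global"])
    return img_out, traj_out


rng = np.random.default_rng(0)
C, H, W = 4, 8, 8
img_feat = rng.standard_normal((C, H, W))   # stand-in for image encoder features
traj_feat = rng.standard_normal((C, H, W))  # stand-in for heat-map encoder features
names = ("img_local", "img_global", "traj_local", "traj_global")
params = {name: 0.1 * rng.standard_normal((C, 2 * C)) for name in names}

img_out, traj_out = dual_enhancement(img_feat, traj_feat, params)
# The final features of both modalities are concatenated along channels.
fused = np.concatenate([img_out, traj_out], axis=0)
print(fused.shape)  # (8, 8, 8)
```

In this sketch the residual form means that a modality carrying no signal (an all-zero feature map) leaves the other modality's features unchanged, which is one simple way to read "dynamically propagates" in the description above.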

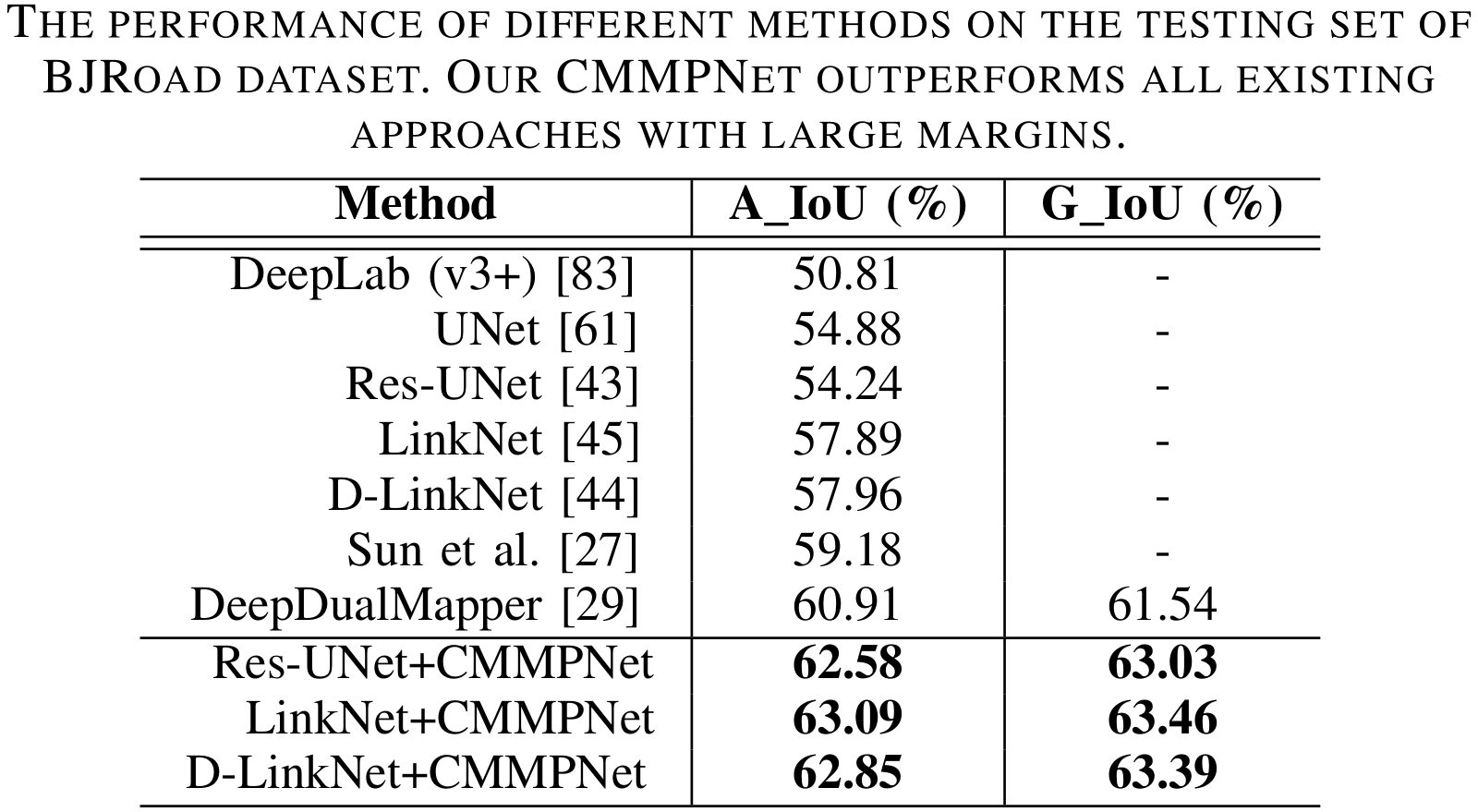

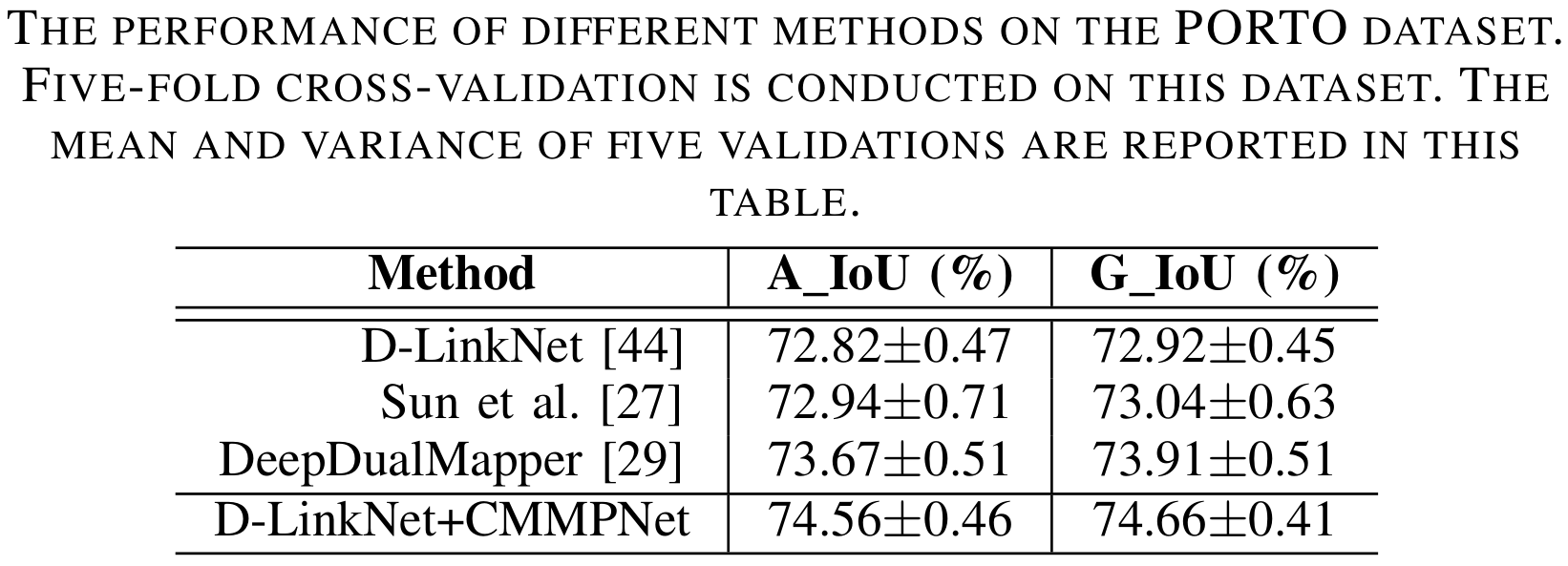

Experiments

- Leveraging Crowdsourced GPS Data for Road Extraction from Aerial Imagery, CVPR 2019

- DeepDualMapper: A Gated Fusion Network for Automatic Map Extraction Using Aerial Images and Trajectories, AAAI 2020

- D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction, CVPR Workshop 2018

Reference